Inverse Reinforcement Learning of Behavioral Models for Online-Adapting Navigation Strategies

Michael Herman, Volker Fischer, Tobias Gindele, Wolfram Burgard

2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, May 26–30, 2015

Abstract:

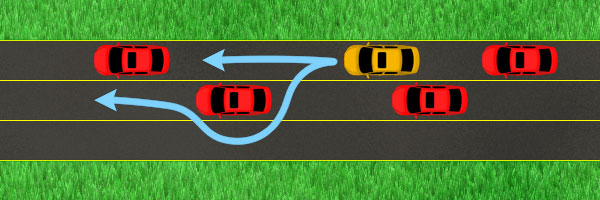

To increase the acceptance of autonomous systems in populated environments, it is indispensable to teach them social behavior. We would expect a social robot that plans its motions among humans to consider both the social acceptability of its behavior and task constraints, such as time limits. These requirements are often contradictory and therefore result in a trade-off. For example, a robot has to decide whether it is more important to reach its goal quickly or to comply with social conventions, such as those concerning proximity to humans; that is, the robot has to react adaptively to task-specific priorities. In this paper, we present a method for priority-adaptive navigation of mobile autonomous systems that optimizes the social acceptability of the behavior while meeting task constraints. We learn acceptability-dependent behavioral models from human demonstrations by using maximum entropy (MaxEnt) inverse reinforcement learning (IRL). These models are generative and describe the learned stochastic behavior. We choose the optimal behavioral model by maximizing the social acceptability under constraints on expected time limits and reliabilities. This approach is evaluated in the context of driving behaviors based on the highway scenario of Levine et al. [1].
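The selection step described in the abstract (maximize social acceptability subject to time-limit and reliability constraints) can be illustrated with a minimal Python sketch. This is not the authors' implementation: the `BehavioralModel` class, the scalar `acceptability` score, the sampled `completion_times`, and the example numbers are all hypothetical, and the constraint is approximated here by the empirical fraction of sampled rollouts that finish within the time limit.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class BehavioralModel:
    """Hypothetical stand-in for one learned behavioral model."""
    name: str
    acceptability: float                # assumed score, higher is more socially acceptable
    completion_times: Sequence[float]   # assumed task durations sampled from the model


def on_time_probability(model: BehavioralModel, time_limit: float) -> float:
    """Empirical fraction of sampled rollouts that finish within time_limit."""
    times = model.completion_times
    return sum(t <= time_limit for t in times) / len(times)


def select_model(
    models: Sequence[BehavioralModel],
    time_limit: float,
    reliability: float,
) -> Optional[BehavioralModel]:
    """Return the most acceptable model that meets the time limit with at
    least the requested reliability, or None if no model is feasible."""
    feasible = [
        m for m in models
        if on_time_probability(m, time_limit) >= reliability
    ]
    if not feasible:
        return None
    return max(feasible, key=lambda m: m.acceptability)


# Illustrative, made-up candidates ordered from most to least acceptable.
models = [
    BehavioralModel("polite", 0.9, [12.0, 14.0, 15.0, 16.0]),
    BehavioralModel("balanced", 0.6, [9.0, 10.0, 11.0, 13.0]),
    BehavioralModel("hurried", 0.2, [6.0, 7.0, 7.5, 8.0]),
]

relaxed = select_model(models, time_limit=20.0, reliability=0.75)
tight = select_model(models, time_limit=13.0, reliability=0.75)
impossible = select_model(models, time_limit=5.0, reliability=0.75)
```

With these made-up numbers, a generous time limit admits the most acceptable model, a tighter limit forces a less acceptable but faster one, and an unreachable limit leaves no feasible model. Tightening the constraint online and re-running the selection is one simple way to picture the priority-adaptive behavior the abstract describes.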

@INPROCEEDINGS{Herman2015ICRA,
  author={Michael Herman and Volker Fischer and Tobias Gindele and Wolfram Burgard},
  booktitle={2015 IEEE International Conference on Robotics and Automation (ICRA)},
  title={Inverse Reinforcement Learning of Behavioral Models for Online-Adapting Navigation Strategies},
  year={2015},
  pages={3215-3222},
  ISSN={1050-4729},
  month={May},
}